AI agents operate in dynamic environments where data relevance, context, sensitivity, and intent can shift from moment to moment. This places new demands on governance. Traditional control models cannot provide the precision or adaptability required when autonomous systems take actions, make decisions, and move data in real time. Knowledge-Based Access Control (KBAC) is a control approach designed for this landscape. It supports secure, contextual, and compliant behaviour for AI agents.

Why coarse-grained controls and static decisions are not enough

Enterprise control frameworks were built for systems that move slowly. Roles, hierarchies, static attributes, and broad entitlements create an administrative layer that works for predictable access patterns. AI agents break this model. They operate across domains, combine data sources with different sensitivity levels, and initiate actions based on observations rather than predefined workflow steps. A static decision made at the start of a session does not hold up when the agent’s context changes, or when the meaning of a data element depends on its relationship to another source.

Coarse policies also mask risk. They authorize wide ranges of actions without understanding lineage, provenance, or the situational meaning of the data involved. This limits the safe use of AI inside enterprises, because coarse policies cannot prevent inappropriate retrievals or the subtle policy violations that only become apparent when data is combined. Enterprises need controls that shift with the state of the world, the state of the agent, and the exact knowledge being requested. This is where KBAC becomes essential.
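To make the combination problem concrete, here is a minimal sketch in Python. It assumes a hypothetical rule set (`FORBIDDEN_COMBINATIONS`) and a hypothetical `AgentSession` class; neither comes from a KBAC product. The point is that each request is re-evaluated against what the agent already holds, rather than being decided once at session start.

```python
# Hypothetical rules: data categories that must never be combined in one session.
FORBIDDEN_COMBINATIONS = [
    frozenset({"health_record", "identity"}),
    frozenset({"salary", "performance_review"}),
]


class AgentSession:
    """Tracks which data categories an agent has already retrieved."""

    def __init__(self):
        self.retrieved = set()

    def may_retrieve(self, category):
        """Return False if adding `category` would complete a forbidden combination."""
        candidate = self.retrieved | {category}
        return not any(combo <= candidate for combo in FORBIDDEN_COMBINATIONS)

    def retrieve(self, category):
        """Re-evaluate on every request; record the category only when allowed."""
        if not self.may_retrieve(category):
            return False
        self.retrieved.add(category)
        return True
```

Each category is harmless on its own here. The request is refused only when it would complete a forbidden pair, which a static role or entitlement check has no way to express.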

Why agents need knowledge-based access control

Agents make decisions based on knowledge, not static entitlements. They reason over the information available to them and select the next step in a chain of actions. This means the access control system must reason in the same way. KBAC evaluates relationships, metadata, provenance information, contextual attributes, and runtime signals. It does not rely on the role of an actor or a static attribute attached to a token. It evaluates whether the knowledge being requested should be available at this specific moment, under these conditions, for this agent.

This creates a governance model that aligns with the nature of AI. The agent’s scope is shaped by the knowledge it is allowed to retrieve, the contextual conditions around that retrieval, and the policies recorded in metadata. Sensitive data can be marked with consent restrictions, allowed uses, jurisdictional rules, and lifecycle status. KBAC uses these elements to determine if the request is compliant. The result is a control layer that supports autonomy without loss of oversight and maintains safety even when agents adapt their behaviour.
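The metadata checks described above can be sketched as a small decision function. This is an illustration under stated assumptions, not a reference implementation: the names `KnowledgeMetadata`, `RequestContext`, and `evaluate`, and the lifecycle value `"active"`, are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeMetadata:
    """Policy-relevant metadata attached to a piece of data (hypothetical shape)."""
    consent_given: bool
    allowed_uses: frozenset
    jurisdictions: frozenset
    lifecycle_status: str  # assumed values: "active", "archived", "pending_deletion"


@dataclass(frozen=True)
class RequestContext:
    """Runtime context of a single request from an agent (hypothetical shape)."""
    agent_id: str
    purpose: str
    jurisdiction: str


def evaluate(meta, ctx):
    """Return (allowed, reasons); every failed condition is reported for audit."""
    reasons = []
    if not meta.consent_given:
        reasons.append("consent not given")
    if ctx.purpose not in meta.allowed_uses:
        reasons.append(f"purpose '{ctx.purpose}' is not an allowed use")
    if ctx.jurisdiction not in meta.jurisdictions:
        reasons.append(f"jurisdiction '{ctx.jurisdiction}' is not permitted")
    if meta.lifecycle_status != "active":
        reasons.append(f"lifecycle status is '{meta.lifecycle_status}'")
    return (not reasons, reasons)
```

Returning the list of reasons alongside the boolean is a design choice made for this sketch: it keeps each denial explainable, which supports the oversight the text calls for.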

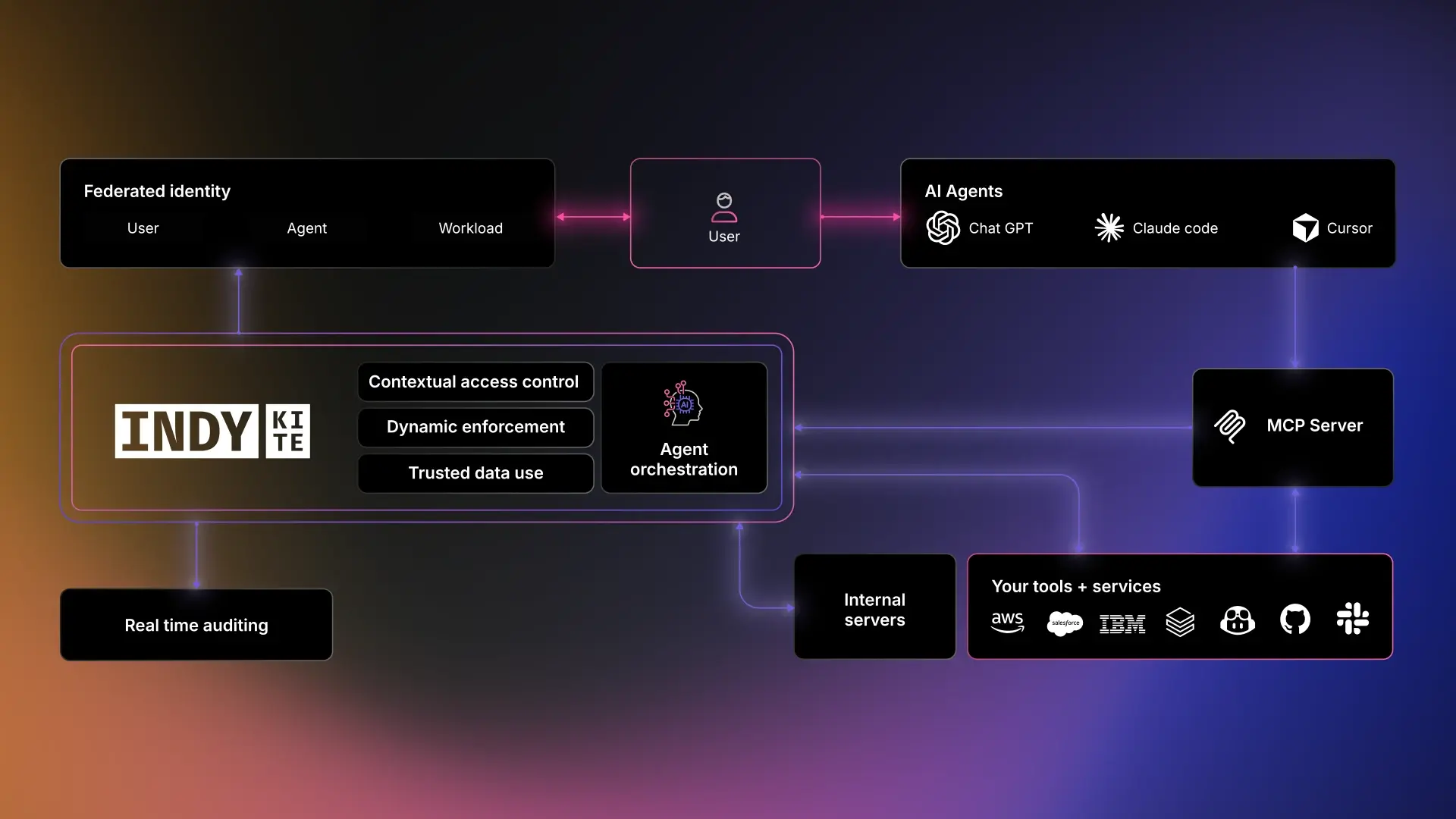

How KBAC works with MCP

The Model Context Protocol (MCP) provides a structured way for agents to interact with external tools and data sources. It defines how an agent requests information, retrieves context, and executes actions. KBAC adds a decision layer that evaluates each request against the full body of knowledge about the data. When an MCP tool provider exposes functions, KBAC sits between the agent and the data and verifies whether the requested knowledge is permissible.

Each MCP call becomes a point of evaluation. The KBAC engine checks provenance, metadata, policy rules, contextual signals, and trust scores. It determines whether the agent can retrieve the specific knowledge fragment or whether the request needs to be filtered, transformed, or denied. The agent receives only the information it is allowed to act upon, which means downstream actions are shaped by compliant knowledge from the outset.
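The per-call evaluation can be sketched as a wrapper around a tool handler. The sketch below does not use any MCP SDK. The tool is a plain Python function standing in for an MCP tool, and the fragment fields (`sensitivity`, `provenance_verified`), the thresholds, and all function names are assumptions made for illustration.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    TRANSFORM = "transform"
    FILTER = "filter"


class AccessDenied(Exception):
    """Raised when the whole request is refused."""


MIN_TRUST = 0.2          # assumed: below this, every call is denied
FULL_ACCESS_TRUST = 0.8  # assumed: needed to see restricted data unredacted


def decide(fragment, trust_score):
    """Pick an outcome for one knowledge fragment."""
    if not fragment.get("provenance_verified", False):
        return Decision.FILTER
    if fragment.get("sensitivity") == "restricted" and trust_score < FULL_ACCESS_TRUST:
        return Decision.TRANSFORM
    return Decision.ALLOW


def guarded_call(tool, args, trust_score):
    """Run a tool and return only the fragments the agent may act upon."""
    if trust_score < MIN_TRUST:
        raise AccessDenied(f"trust score {trust_score} is below {MIN_TRUST}")
    permitted = []
    for fragment in tool(**args):
        decision = decide(fragment, trust_score)
        if decision is Decision.ALLOW:
            permitted.append(fragment)
        elif decision is Decision.TRANSFORM:
            permitted.append({**fragment, "text": "[REDACTED]"})
        # Decision.FILTER: the fragment is dropped and never reaches the agent.
    return permitted


def lookup_customer(customer_id):
    """Stand-in tool handler returning fragments with hypothetical metadata."""
    return [
        {"text": "Plan: enterprise", "sensitivity": "internal", "provenance_verified": True},
        {"text": "Payment details on file", "sensitivity": "restricted", "provenance_verified": True},
        {"text": "Unverified note", "sensitivity": "internal", "provenance_verified": False},
    ]
```

With these assumed thresholds, a call such as `guarded_call(lookup_customer, {"customer_id": "c-1"}, trust_score=0.5)` keeps the first fragment, redacts the second, and drops the third, so the agent only ever reasons over what the policy allowed.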

This pairing provides a strong governance and security model for enterprise AI agents. MCP structures the operational flow. KBAC ensures that every step is grounded in verified, policy-aligned knowledge. The combination supports safe automation, controlled reasoning, and predictable behaviour at scale.